The Importance of XML Sitemaps for Dental Websites

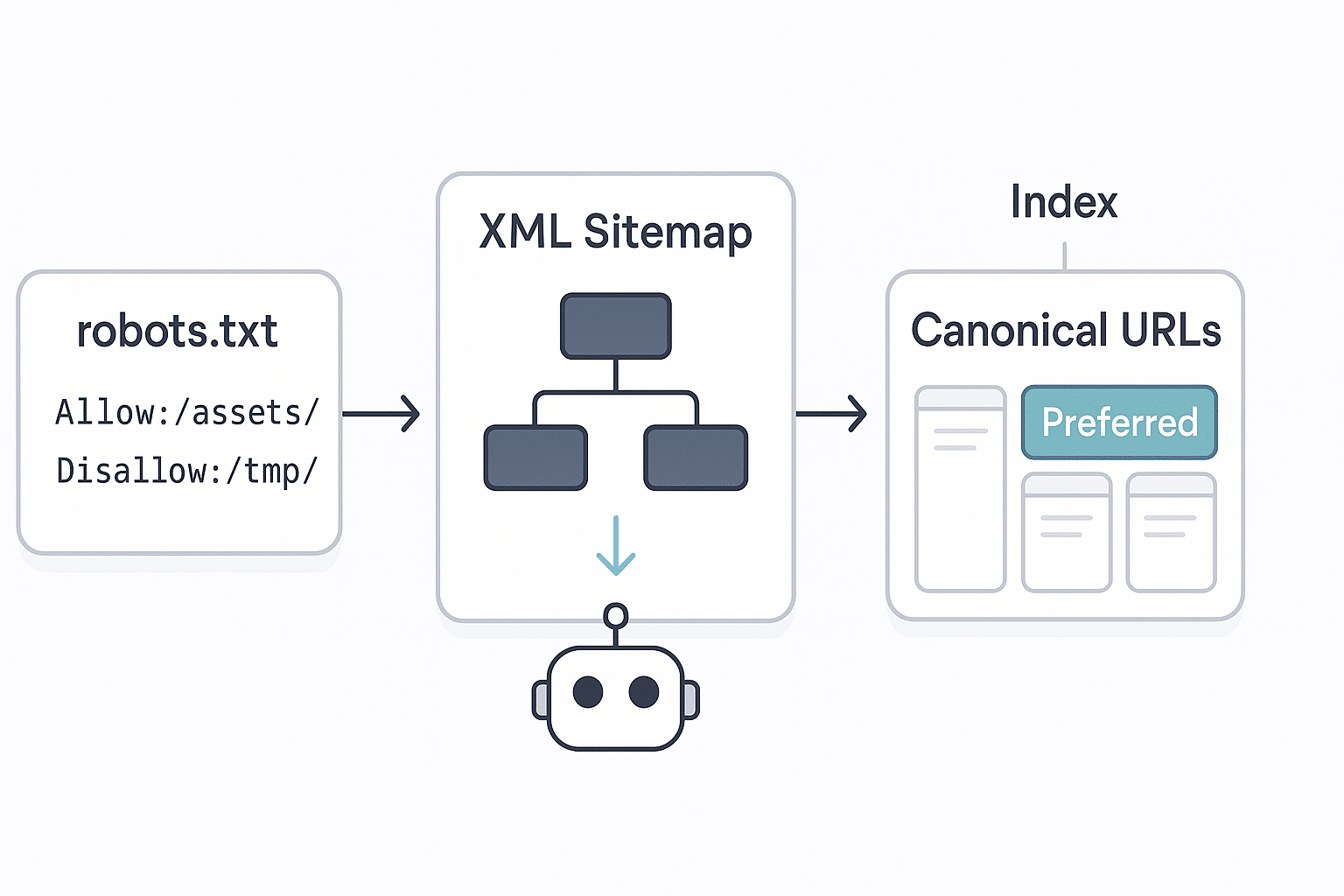

An XML sitemap is a file that lists the important pages on your dental website so that search engines like Google can discover and crawl them efficiently. For dentists, a well-structured sitemap helps new or updated pages, such as service pages, location pages, or blog posts, get discovered sooner, which supports your site’s visibility in search results. A sitemap also helps search engines understand your website’s structure and signals which URLs you consider worth indexing. It does not guarantee that every listed page will be indexed, but it removes a common obstacle to local SEO success.
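To make the file format concrete, here is a minimal sketch that builds a sitemap with Python’s standard library. The page URLs and dates are hypothetical placeholders, and `build_sitemap` is an illustrative helper, not part of any SEO tool. Most sites would let a CMS plugin generate this file instead.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for an example dental practice.
pages = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/services/teeth-whitening/", "2024-02-01"),
    ("https://www.example.com/locations/downtown/", "2024-02-10"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Each `url` entry carries the page address in `loc` and an optional `lastmod` date, which is the part search engines can use to notice updated pages.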

The Role of Robots.txt in Website Crawling

The robots.txt file is a plain text file, placed at the root of your domain, that tells search engine crawlers which parts of your website they may crawl. It controls crawling, not indexing: a blocked URL can still appear in search results if other sites link to it, so a page that must stay out of the index needs a noindex directive on a page crawlers are allowed to fetch. For dental websites, robots.txt can keep crawlers out of irrelevant or duplicate areas, such as admin areas or staging environments, which reduces unnecessary crawling. A properly configured file keeps search engines focused on your most important content and helps you avoid problems caused by unintended pages being crawled.
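As a rough illustration of what such a file contains, the sketch below assembles a small robots.txt from a list of blocked paths. The paths `/admin/` and `/staging/` and the sitemap URL are assumptions chosen to match the examples above, and `build_robots_txt` is a hypothetical helper.

```python
def build_robots_txt(disallowed_paths, sitemap_url):
    """Return robots.txt text that applies the same rules to all crawlers."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    # The Sitemap line tells crawlers where to find the XML sitemap.
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Hypothetical paths and sitemap location.
robots_txt = build_robots_txt(
    ["/admin/", "/staging/"],
    "https://www.example.com/sitemap.xml",
)
print(robots_txt)
```

The resulting text would be saved as `robots.txt` at the root of the site, for example `https://www.example.com/robots.txt`.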

How XML Sitemaps and Robots.txt Work Together

XML sitemaps and robots.txt files complement each other to optimize search engine crawling. The sitemap provides a roadmap of your website’s key pages, while robots.txt controls which sections crawlers can access. By submitting your sitemap to Google Search Console and configuring robots.txt correctly, you guide search engines efficiently through your site, so that relevant pages are easy to find and low-value sections are not crawled. The two files should agree: a URL listed in the sitemap should never be disallowed in robots.txt. This coordination supports your SEO efforts and your site’s visibility in local search results.
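One way to check that the two files agree is to test every sitemap URL against the robots.txt rules. The sketch below does this with Python’s standard `urllib.robotparser`; the file contents are hypothetical samples, and `blocked_sitemap_urls` is an illustrative helper name.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Hypothetical sample files for an example dental site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/

Sitemap: https://www.example.com/sitemap.xml
"""

SITEMAP_XML = """\
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/services/implants/</loc></url>
  <url><loc>https://www.example.com/staging/new-homepage/</loc></url>
</urlset>
"""


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="Googlebot"):
    """Return sitemap URLs that the robots.txt rules forbid crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    ns = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", ns)]
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


conflicts = blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML)
print(conflicts)
```

Any URL in the returned list is sending mixed signals: the sitemap asks for it to be crawled while robots.txt forbids it. In this sample, the staging page should be removed from the sitemap.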

Best Practices for Creating and Maintaining XML Sitemaps

To maximize the benefits of your XML sitemap, ensure it is comprehensive, up-to-date, and free of errors. Generate your sitemap using tools or plugins, and update it whenever you add or remove pages. Submit your sitemap to Google Search Console to facilitate indexing. Leave out low-value pages like admin or duplicate content, and make sure your most important service and location pages are included. Keep each sitemap file within the protocol limits of 50,000 URLs and 50 MB uncompressed; larger sites should split their URLs across several files listed in a sitemap index.
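The filtering rules above can be sketched as a small pre-processing step that runs before the sitemap is written. The excluded prefixes and URLs here are hypothetical, and `select_sitemap_urls` is an illustrative helper; a sitemap plugin would normally apply similar rules through its settings.

```python
from urllib.parse import urlparse

MAX_URLS_PER_SITEMAP = 50_000  # limit from the sitemaps.org protocol
EXCLUDED_PREFIXES = ("/admin/", "/login/")  # hypothetical low-value paths


def select_sitemap_urls(candidate_urls):
    """Drop duplicates and low-value paths, preserving the original order."""
    seen = set()
    selected = []
    for url in candidate_urls:
        path = urlparse(url).path
        if url in seen or path.startswith(EXCLUDED_PREFIXES):
            continue
        seen.add(url)
        selected.append(url)
    if len(selected) > MAX_URLS_PER_SITEMAP:
        raise ValueError("Too many URLs for one sitemap; split it and use a sitemap index")
    return selected


candidates = [
    "https://www.example.com/",
    "https://www.example.com/services/implants/",
    "https://www.example.com/admin/settings/",
    "https://www.example.com/services/implants/",  # duplicate entry
]

selected = select_sitemap_urls(candidates)
print(selected)
```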

Best Practices for Configuring Your Robots.txt File

Configure your robots.txt file carefully to keep crawlers out of unnecessary parts of your website while allowing them to crawl your main content. Use the Disallow directive to prevent crawling of admin pages, login areas, or duplicate content folders. Because robots.txt is publicly readable and only advisory, protect truly sensitive areas with authentication rather than relying on Disallow. Always test the file, for example with the robots.txt report in Google Search Console, to confirm it behaves as intended. Review and update it as your website evolves to maintain crawl efficiency and prevent accidental blocking of valuable content.
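Alongside Google’s own tooling, a quick local check can catch accidental blocking before a change goes live. The sketch below uses Python’s standard `urllib.robotparser` with hypothetical rules and paths; `check_robots` is an illustrative helper. Note that this parser does not implement every extension Google supports, such as wildcard patterns, so treat it as a first-pass check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example dental site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login/
"""


def check_robots(robots_txt, must_allow, must_block, user_agent="*"):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in must_allow:
        if not parser.can_fetch(user_agent, path):
            problems.append(f"blocked but should be crawlable: {path}")
    for path in must_block:
        if parser.can_fetch(user_agent, path):
            problems.append(f"crawlable but should be blocked: {path}")
    return problems


problems = check_robots(
    ROBOTS_TXT,
    must_allow=["/", "/services/teeth-whitening/", "/locations/downtown/"],
    must_block=["/admin/settings", "/login/"],
)
print(problems)
```

Keeping the `must_allow` list in sync with your key service and location pages turns this into a simple regression test to run whenever robots.txt changes.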

Benefits of Proper XML Sitemap and Robots.txt Management

Effective use of XML sitemaps and robots.txt files helps search engines crawl your dental website thoroughly and efficiently. This supports better visibility in search results for your key services and locations, which in turn can lead to more patient inquiries. Proper configuration also keeps well-behaved crawlers out of areas that do not belong in search, although it is not a security measure, and it keeps your SEO efforts on a sound footing for the long term.